Title: AI-Generated English Subtitles: Achieving Automatic Matching and Synchronization in Animation

In the digital age, the rapid advancement of artificial intelligence (AI) has revolutionized various industries, including entertainment, education, and marketing. One such innovation is AI voice generation technology, which has gained immense popularity due to its efficiency, convenience, and versatility. This article explores how AI can generate English animation subtitles and achieve automatic matching and synchronization, making the process smoother and more accessible for content creators.

### Introduction to Voice Generation and Animation

AI voice generation technology has enabled the creation of high-quality voiceovers that can be tailored to suit various applications, such as video games, animations, and e-learning modules. With the advent of advanced algorithms and machine learning, these tools have become increasingly sophisticated, providing users with a diverse range of voices and languages.

Animation, on the other hand, is a dynamic medium that combines visuals and audio to tell a story or convey a message. With the growing demand for engaging and interactive content, the need for efficient subtitle generation has become more critical than ever.

### The Need for English Subtitles in Animation

English subtitles play a crucial role in enhancing the accessibility and comprehension of animated content. They help viewers understand the dialogue and context, especially in cases where the audio quality is poor or when the content is intended for a non-native English-speaking audience. Subtitles also serve as a valuable resource for individuals with hearing impairments, ensuring that they can enjoy and understand the content.

### AI-Generated Subtitles: A Breakthrough in Animation Production

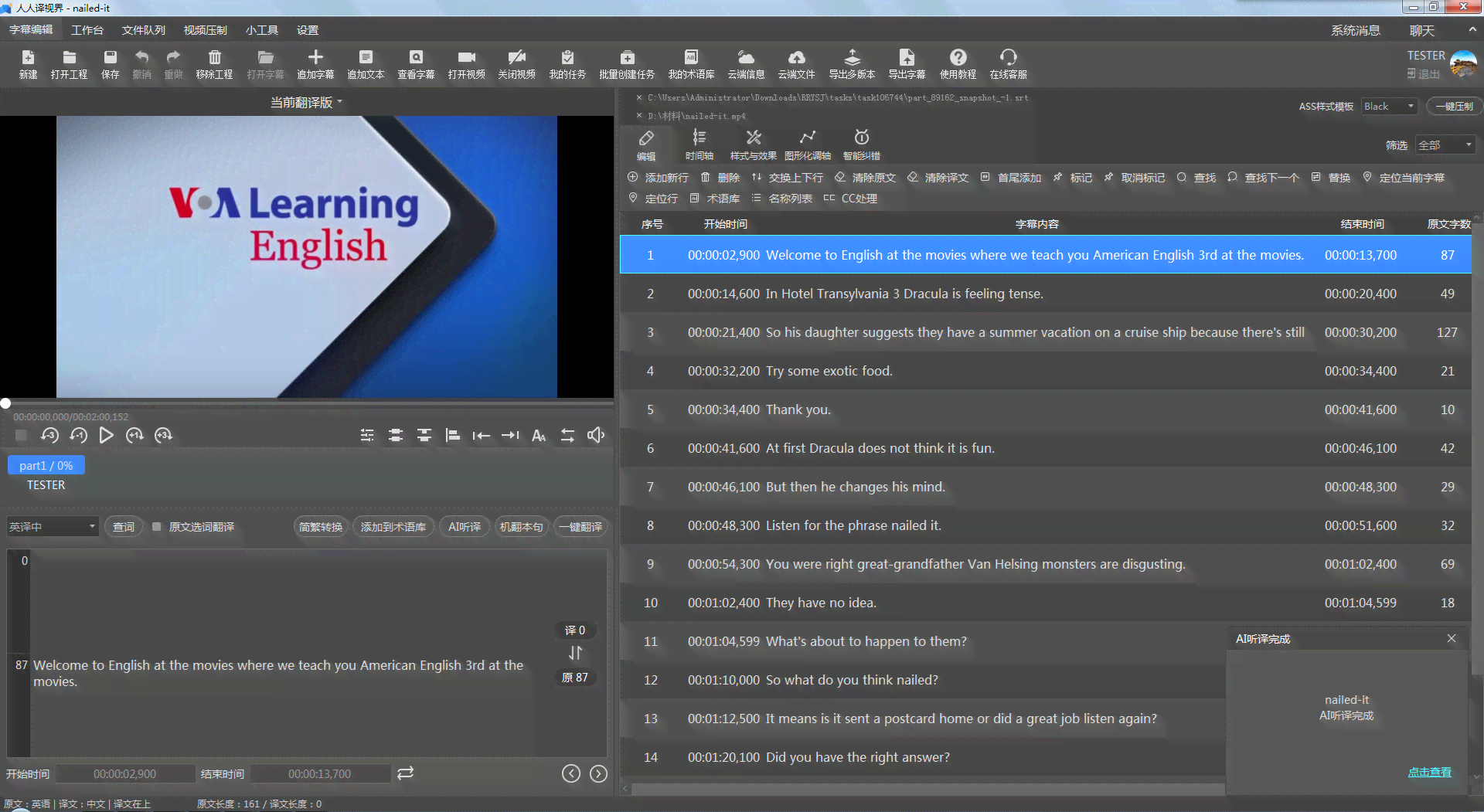

AI-generated English subtitles have emerged as a game-changer in the animation industry. These tools leverage natural language processing (NLP) and machine learning algorithms to automatically transcribe spoken words into text and translate that text, which can then be synchronized with the animation.

Here's a step-by-step guide on how AI achieves automatic matching and synchronization of English subtitles in animation:

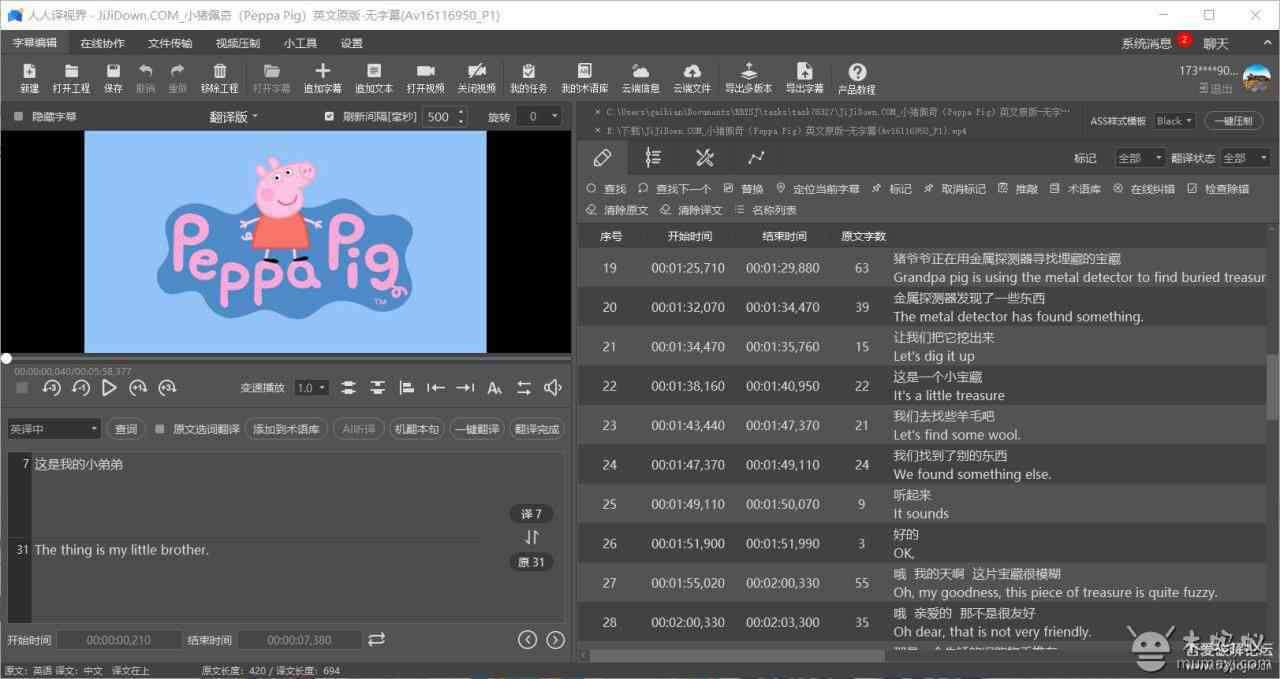

#### 1. Speech Recognition and Transcription

The first step is for the AI tool to recognize and transcribe the spoken words in the animation. Speech recognition algorithms convert the audio into written text, typically recording when each word or phrase is spoken. Transcription can run in real time or as a pass over the finished audio track; in both cases, the recorded timestamps are what later allow the subtitles to be matched to the spoken words.
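As a rough illustration of what this step might hand to the rest of the process, the sketch below assumes the recognizer returns word-level timestamps. The `Word` class, the `split_on_pauses` helper, the 0.6-second pause threshold, and the sample data are all hypothetical and are not taken from any particular tool.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Word:
    """One recognized word with its timing (hypothetical recognizer output)."""
    text: str
    start: float  # seconds from the start of the audio
    end: float


def split_on_pauses(words: List[Word], max_gap: float = 0.6) -> List[List[Word]]:
    """Group consecutive words, starting a new group whenever the silence
    between two words is longer than max_gap seconds."""
    segments: List[List[Word]] = []
    current: List[Word] = []
    for word in words:
        if current and word.start - current[-1].end > max_gap:
            segments.append(current)
            current = []
        current.append(word)
    if current:
        segments.append(current)
    return segments


# Made-up sample data standing in for real recognizer output.
words = [
    Word("Hello", 0.00, 0.40),
    Word("there", 0.45, 0.80),
    Word("friend", 2.00, 2.50),
]
segments = split_on_pauses(words)
for segment in segments:
    print(segment[0].start, segment[-1].end, " ".join(w.text for w in segment))
```

Breaking at pauses is only one possible heuristic; a real tool might also break at punctuation or speaker changes.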

#### 2. Language Processing and Translation

Once the transcription is complete, the tool employs natural language processing techniques to translate the text into English. This step is crucial for ensuring that the subtitles are accurate and contextually relevant. The algorithms analyze the grammar, syntax, and semantics of the original language to generate an accurate English translation.
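One practical detail in this step is that translation should change only the text, not the timing attached to it. The sketch below assumes each transcribed segment carries a start and end time and accepts any translation function as a parameter. The `Segment` class and `translate_segments` are hypothetical names, and `toy_translate` is a made-up phrasebook lookup standing in for a real translation model.

```python
from dataclasses import dataclass, replace
from typing import Callable, List


@dataclass(frozen=True)
class Segment:
    """A timed piece of dialogue (hypothetical structure)."""
    start: float  # seconds
    end: float
    text: str


def translate_segments(
    segments: List[Segment], translate: Callable[[str], str]
) -> List[Segment]:
    """Return new segments with translated text and unchanged timing."""
    return [replace(seg, text=translate(seg.text)) for seg in segments]


# Toy stand-in for a real translation model: a two-entry phrasebook.
PHRASEBOOK = {"Bonjour": "Hello", "Merci": "Thank you"}


def toy_translate(text: str) -> str:
    # Fall back to the original text when the phrase is unknown.
    return PHRASEBOOK.get(text, text)


source = [Segment(0.0, 1.2, "Bonjour"), Segment(1.5, 2.4, "Merci")]
translated = translate_segments(source, toy_translate)
for seg in translated:
    print(seg.start, seg.end, seg.text)
```

Passing the translator in as a function keeps the timing logic independent of whichever translation backend is actually used.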

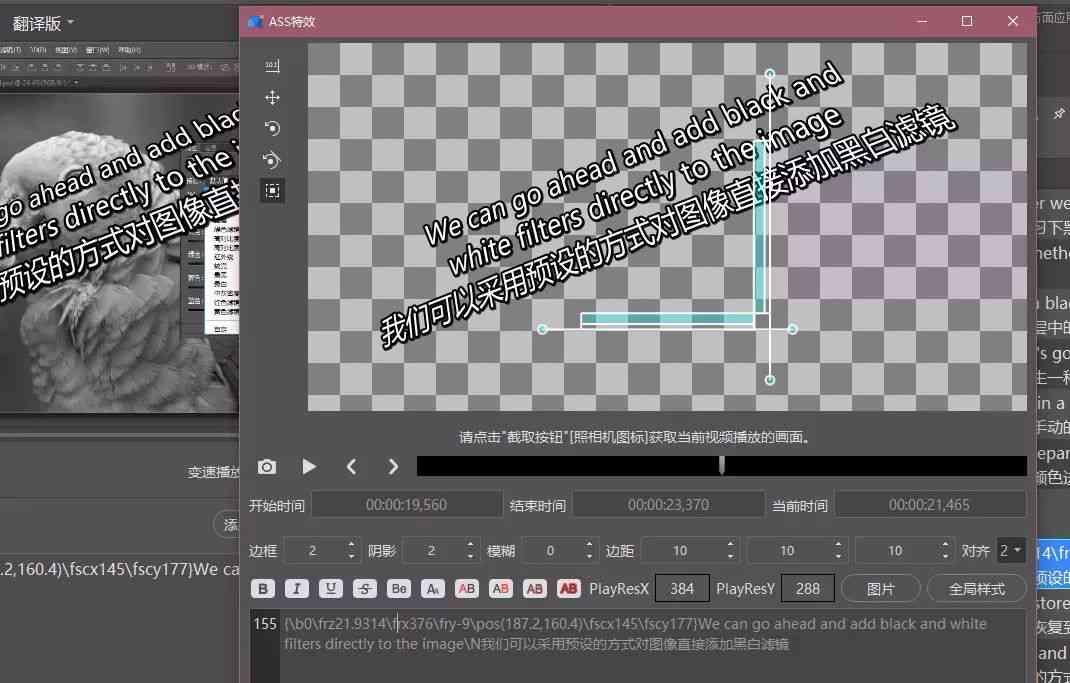

#### 3. Synchronization and Timing

The tool then synchronizes the English subtitles with the animation. This involves aligning the text with the spoken words and ensuring that the timing is accurate. The tool uses machine learning algorithms to predict the duration of each spoken word and adjusts the subtitle timing accordingly. This ensures that the subtitles appear and disappear at the right moments, enhancing the viewer's experience.
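To make the timing step more concrete, here is a minimal sketch that writes timed cues in the widely used SubRip (`.srt`) layout, which is an assumed output format since the article does not name one. Cues are plain `(start, end, text)` tuples, and the helper names and the one-second minimum display time are illustrative assumptions.

```python
from typing import List, Tuple

Cue = Tuple[float, float, str]  # (start seconds, end seconds, text)


def to_srt_timestamp(seconds: float) -> str:
    """Format seconds as HH:MM:SS,mmm, the timestamp layout used by .srt files."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def enforce_min_duration(cues: List[Cue], min_duration: float = 1.0) -> List[Cue]:
    """Keep very short cues on screen longer, but never past the next cue's start."""
    adjusted = []
    for i, (start, end, text) in enumerate(cues):
        new_end = max(end, start + min_duration)
        if i + 1 < len(cues):
            new_end = min(new_end, cues[i + 1][0])
        adjusted.append((start, new_end, text))
    return adjusted


def render_srt(cues: List[Cue]) -> str:
    """Render cues as numbered SRT blocks separated by blank lines."""
    blocks = []
    for index, (start, end, text) in enumerate(cues, start=1):
        timing = f"{to_srt_timestamp(start)} --> {to_srt_timestamp(end)}"
        blocks.append(f"{index}\n{timing}\n{text}\n")
    return "\n".join(blocks)


cues = [(0.0, 0.3, "Hi"), (0.8, 2.0, "How are you?")]
srt_text = render_srt(enforce_min_duration(cues))
print(srt_text)
```

Working in whole milliseconds before splitting into hours, minutes, and seconds avoids rounding a value up to an invalid `60` in the seconds field.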

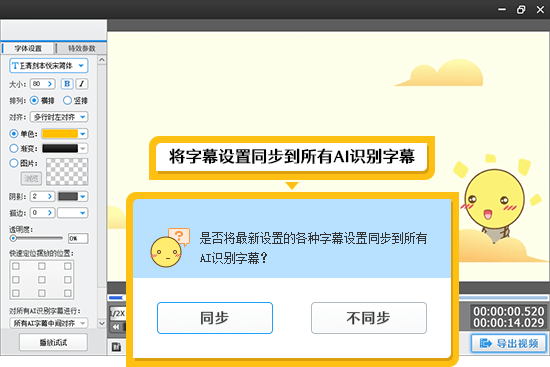

#### 4. Formatting and Styling

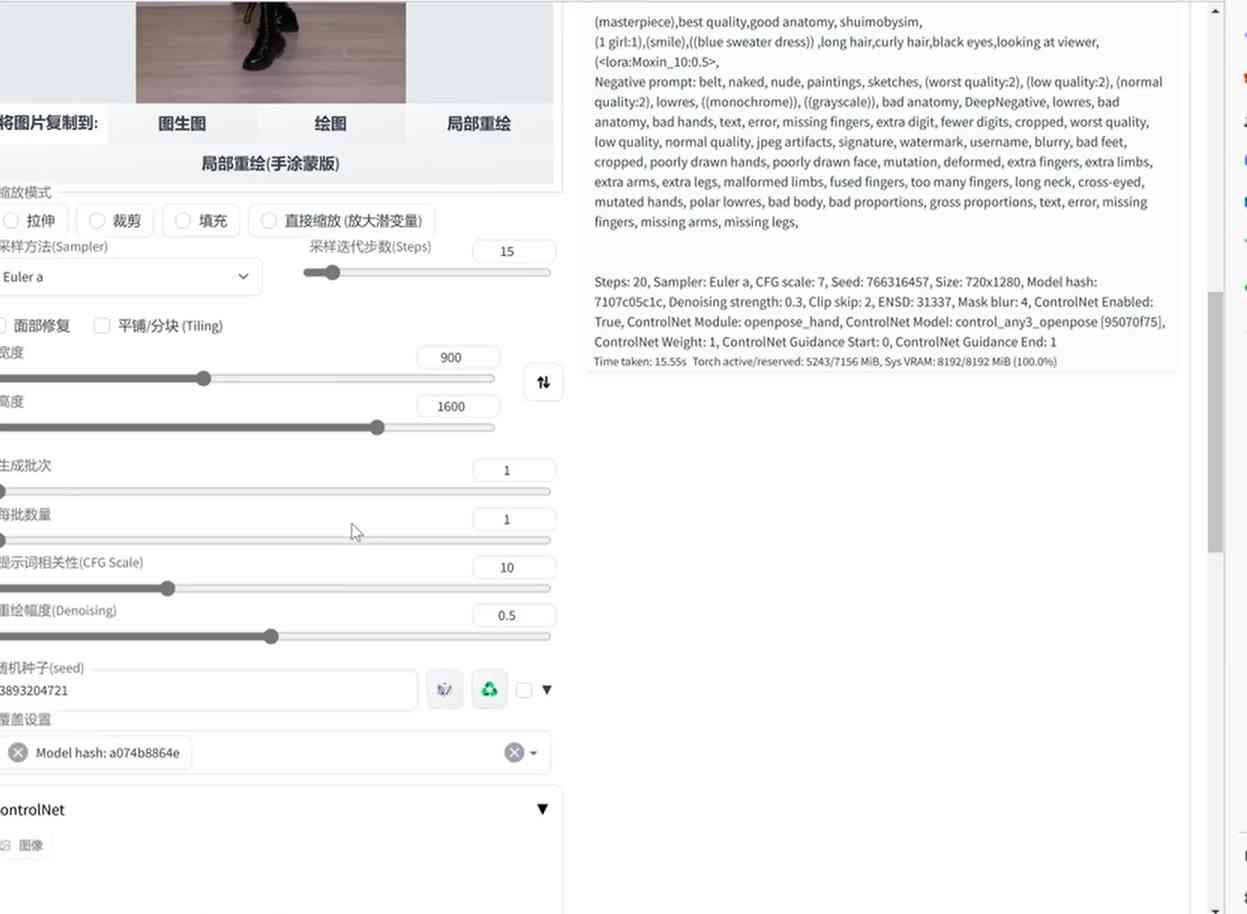

After synchronization, the tool formats and styles the subtitles to match the overall look and feel of the animation. This includes adjusting the font size, color, and position of the text to ensure that it is easily readable and visually appealing.
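Readability also depends on how much text is shown at once. The sketch below wraps long dialogue into short lines using Python's standard `textwrap` module and splits it into several cue texts when it would not fit. The limits of 42 characters per line and two lines per cue are assumptions chosen for illustration, not values from the article, and `wrap_cue` is a hypothetical helper.

```python
import textwrap
from typing import List


def wrap_cue(text: str, max_chars: int = 42, max_lines: int = 2) -> List[str]:
    """Wrap text to max_chars per line and return one string per cue,
    each holding at most max_lines lines joined by newlines."""
    lines = textwrap.wrap(text, width=max_chars)
    return [
        "\n".join(lines[i:i + max_lines])
        for i in range(0, len(lines), max_lines)
    ]


long_text = (
    "The quick brown fox jumps over the lazy dog and then keeps running "
    "through the forest until the sun finally sets behind the hills"
)
chunks = wrap_cue(long_text)
for chunk in chunks:
    print(chunk)
    print("---")
```

When a line of dialogue is split into several cues this way, its original time span would also need to be divided among them, which this sketch does not attempt.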

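### Case Study: AI-Generated Subtitles in Practice

Let's consider an example where an AI subtitling tool generates English subtitles for an animation, for instance one produced with a generator such as AnimateDiff or Stable Diffusion. (Those two models generate imagery rather than subtitles, so the transcription and translation are handled by a separate speech-recognition tool.) Assume the animation is six minutes and 39 seconds long, and the tool has been trained to recognize and transcribe spoken words accurately.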

1. Speech Recognition: The tool transcribes the spoken words in the animation, ensuring that each word is captured accurately.

2. Language Processing: The tool then translates the transcribed text into English, taking into account the context and nuances of the original language.

3. Synchronization: The algorithms synchronize the English subtitles with the animation, adjusting the timing to match the spoken words.

4. Formatting: The tool formats the subtitles to match the animation's visual style, ensuring that they are easily readable and visually appealing.
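After the four steps above, a simple sanity check can catch timing mistakes before the subtitles are published. The sketch below uses the six-minute-39-second runtime from this example (399 seconds) and flags cues that are inverted, overlap the previous cue, or run past the end of the animation. The function name and the sample cues are hypothetical.

```python
from typing import List, Tuple

Cue = Tuple[float, float, str]  # (start seconds, end seconds, text)

# Runtime of the example animation: 6 minutes 39 seconds.
ANIMATION_SECONDS = 6 * 60 + 39  # 399


def find_timing_problems(cues: List[Cue], duration: float) -> List[str]:
    """Return a human-readable message for each timing problem found."""
    problems = []
    previous_end = 0.0
    for index, (start, end, _text) in enumerate(cues, start=1):
        if start >= end:
            problems.append(f"cue {index}: start is not before end")
        if start < previous_end:
            problems.append(f"cue {index}: overlaps the previous cue")
        if end > duration:
            problems.append(f"cue {index}: runs past the end of the animation")
        previous_end = max(previous_end, end)
    return problems


# Made-up cues with two deliberate mistakes.
sample_cues = [
    (0.0, 2.0, "Welcome back."),
    (1.5, 3.0, "Let's begin."),      # starts before the first cue ends
    (398.0, 400.0, "See you soon."),  # ends after the 399-second runtime
]
problems = find_timing_problems(sample_cues, ANIMATION_SECONDS)
for problem in problems:
    print(problem)
```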

### Conclusion

AI-generated English subtitles have transformed the animation industry by providing a fast, efficient, and accurate way to add text to animated content. By leveraging advanced speech recognition, language processing, and synchronization techniques, AI tools can automatically match and synchronize subtitles with animations, saving time and effort for content creators.

As technology continues to evolve, we can expect even more sophisticated tools that can generate accurate and contextually relevant subtitles in multiple languages. This will further enhance the accessibility and reach of animated content, making it more engaging and inclusive for diverse audiences.